Violent events in recent months have quickly become entangled with misinformation and rumors, illustrating how tragedy and falsehood can feed one another. A close look at recent attacks in the U.S. reveals a pattern: acts of violence ignite speculation, speculation fuels conspiracy theories, and misinformation spreads before the facts take hold.

Mormon Church Shooting in Michigan

On Sept. 28, an ex-Marine rammed his pickup truck into a meetinghouse of the Church of Jesus Christ of Latter-day Saints in Grand Blanc Township, Michigan, then opened fire and set the building ablaze. At least four people were killed and eight wounded before officers shot the suspect, identified as 40-year-old Thomas Jacob Sanford, police said.

The FBI labeled it an “act of targeted violence.” Sanford, who served in Iraq, died at the scene. Speculation about his political affiliations surfaced almost immediately.

USA Today reported that a 2019 Facebook photo showed him in a Trump 2020 shirt, and a “Trump-Vance” sign was seen at his home after the attack. At the same time, a local city council candidate, Kris Johns, told the Detroit Free Press that Sanford had voiced anti-Mormon views when they spoke days earlier, but had not mentioned politics. Johns described Sanford as “extremely friendly” and said his comments echoed talking points found on social media platforms like YouTube and TikTok.

Michigan Gov. Gretchen Whitmer urged restraint in interpreting Sanford’s actions. “Speculation is unhelpful and it can be downright dangerous,” she said at a Sept. 29 press conference after the Grand Blanc shooting.

Conspiracies After Charlie Kirk’s Assassination

Just over two weeks earlier, on Sept. 10, conservative activist Charlie Kirk was assassinated at Utah Valley University. Within hours, artificial intelligence tools contributed to a flood of misinformation. CBS News documented how Grok, X’s chatbot, falsely named individuals as suspects before police identified 22-year-old Tyler Robinson. Grok also contradicted itself about Robinson’s voter registration and even claimed Kirk was still alive after officials confirmed his death.

Other systems also misfired. Google’s AI Overview briefly identified an uninvolved man as a suspect, while Perplexity’s X bot described the shooting as “hypothetical” in a post the company later deleted. “It’s not based on fact checking,” said S. Shyam Sundar, director of Penn State’s Center for Socially Responsible Artificial Intelligence, describing how such tools generate answers. “It’s more based on the likelihood of this event occurring.”

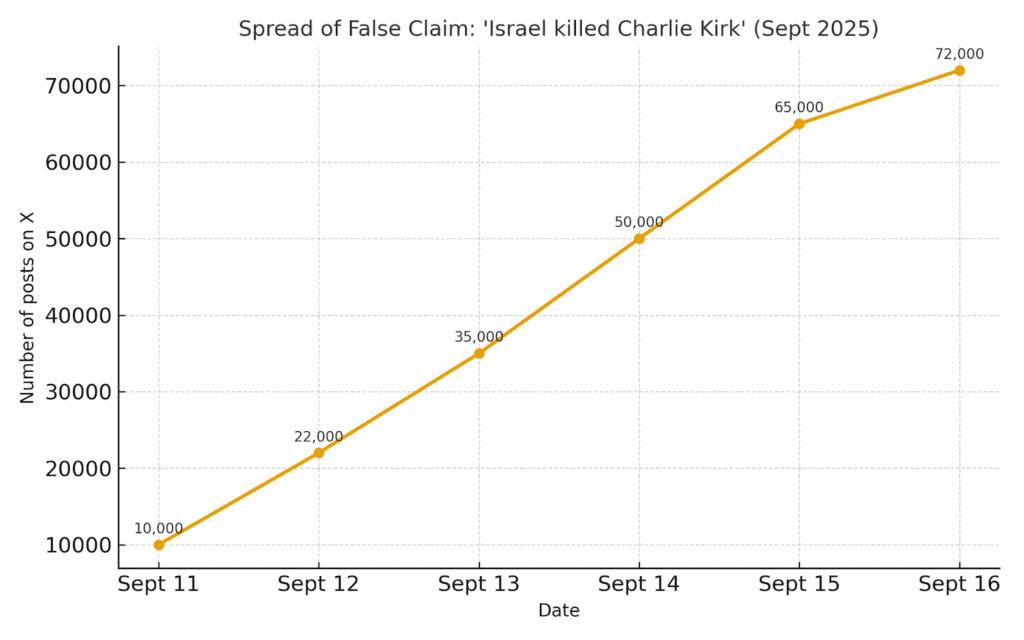

In the days following the assassination, conspiracy theories multiplied. Conservative commentator Candace Owens suggested there were underground tunnels near the crime scene and promoted her theories in a series of YouTube videos. One video, titled “They Are Lying About Charlie Kirk,” drew more than 8 million views. Antisemitic narratives blaming Israel grew so widespread that Israeli Prime Minister Benjamin Netanyahu publicly denied the claims, calling them a “monstrous big lie.” The Anti-Defamation League reported more than 72,000 posts on X repeating the phrase “Israel killed Charlie Kirk” by Sept. 16.

Michael Bitzer, a professor of politics and history at Catawba College in North Carolina, noted how conspiracy influencers have gained credibility. “What is different today is the spread and the power of these conspiracy influencers to really say whatever they want,” he told the New York Times. “And it becomes accepted by folks to say this is legitimate.”

YouTube Restores Banned Accounts

On Sept. 24, YouTube said it would reinstate creators previously banned for spreading misinformation about COVID-19 and the 2020 election. Alphabet, YouTube’s parent company, said the move reflected its commitment to “free expression” on politically charged issues.

The decision came after pressure from House Judiciary Committee Chairman Jim Jordan and other congressional Republicans, who accused the Biden administration of coercing tech companies, including YouTube, Meta, and Twitter (now X), into suppressing speech. In a letter to the House Judiciary Committee, Alphabet’s attorneys acknowledged that many conservative influencers had been removed under policies that have since been retired. Among those banned was Dan Bongino, who now serves as deputy director of the FBI.

Shooting at the CDC

Weeks before these events, on Aug. 8, a gunman opened fire at the Centers for Disease Control and Prevention headquarters in Atlanta, killing a police officer before dying of a self-inflicted wound. Authorities identified the shooter as 30-year-old Patrick Joseph White, who had left writings expressing grievances about COVID-19 vaccines, including the belief that they had made him sick and depressed. Neighbors told ABC News he often voiced conspiracy-minded complaints about health issues he linked to vaccination.

Investigators recovered nearly 500 shell casings at the scene, and about 200 rounds struck CDC buildings. Susan Monarez, then the CDC’s director, told the agency’s more than 10,000 employees that “the dangers of misinformation and its promulgation has now led to deadly consequences” and pledged to rebuild public trust through science and evidence.

A Recurring Pattern

Each of these incidents shows how violence and misinformation have become linked in American public life. A mass shooting or assassination creates an initial vacuum of information. Law enforcement is cautious, details are scarce, and communities are frightened. Into that vacuum rush both accidental errors and deliberate speculation.

This dynamic resembles what researchers call an information cascade, in which people who are unsure of the facts rely on what others appear to believe rather than on their own information. Once cascades start, they are difficult to reverse, especially on platforms where speed outruns verification.

Social media accelerates this effect. False claims are often more novel or sensational than official updates and therefore travel farther. A 2018 study in Science by MIT researchers Soroush Vosoughi, Deb Roy, and Sinan Aral found that false news stories on Twitter were 70 percent more likely to be retweeted than true ones. The authors linked the gap to novelty: false stories tended to be more surprising, and replies to them expressed more surprise and disgust. That pattern was visible after Charlie Kirk’s killing, when AI-generated images and rumors about underground tunnels or foreign governments spread more widely than investigators’ early findings.

In moments of fear, people seek explanations that align with what they already believe, a tendency known as confirmation bias. For Kirk’s supporters, theories that tied the shooting to terrorist networks or foreign plots made more sense than a lone gunman. For his critics, the suggestion that the suspect was a right-wing extremist felt plausible. In Michigan, Sanford’s Trump yard sign became evidence for some, while his anti-Mormon remarks became evidence for others, even as officials stressed the motive was unclear.

Artificial intelligence adds a new layer. Unlike human rumor-mongers, chatbots such as Grok and search features such as Google’s AI Overview deliver content with an air of machine authority. People often perceive AI as less biased than strangers online, which makes them more likely to trust its errors. That misplaced confidence can entrench falsehoods before corrections arrive.

At the CDC, then-Director Susan Monarez linked misinformation directly to a deadly attack on a federal health agency by a gunman who blamed vaccines for his health problems. In Kirk’s case, antisemitic theories about Israel gained such traction that a head of state publicly rebutted them. The cycle is clear: violence sparks uncertainty, uncertainty fuels speculation, and speculation spreads misinformation that deepens division and sometimes incites more danger.

References

CBS/AP. “YouTube to start bringing back accounts of creators banned for misinformation.” CBS News, Sept. 24, 2025. https://www.cbsnews.com/news/youtube-accounts-creators-banned-misinformation-covid-19-election/

Crowley, Kinsey. “Was Thomas Jacob Sanford a Trump supporter? What we know about Michigan church suspect.” USA Today, Sept. 29, 2025. https://www.usatoday.com/story/news/politics/2025/09/29/thomas-jacob-sanford-michigan-shooting-trump/86415757007/

Fausset, Richard. “After Charlie Kirk’s Assassination, a Bumper Crop of Conspiracy Theories.” New York Times, Sept. 29, 2025. https://www.nytimes.com/2025/09/29/us/charlie-kirk-assassination-conspiracy-theories.html

Fichten, Lauren, and Julia Ingram. “AI fuels false claims after Charlie Kirk’s death, CBS News analysis reveals.” CBS News, Sept. 12, 2025. https://www.cbsnews.com/news/ai-false-claims-charlie-kirk-death/

Kekatos, Mary. “CDC director says misinformation ‘led to deadly consequences’ in campus shooting.” ABC News, Aug. 12, 2025. https://abcnews.go.com/US/suspected-gunman-cdc-shooting-fired-500-rounds-officials/story?id=124577732

Volmert, Isabella, and Corey Williams. “Gunman opens fire at Michigan church and sets it ablaze, killing at least 4 and wounding 8.” Associated Press, Sept. 29, 2025. https://apnews.com/article/mormon-church-shooting-michigan-dcb79ee701b0b8076bf73e30e10ba2b7